Client state management, sync engines, and the future of application development

01/19/2025

Modern application development is rife with incidental complexity. LLM-driven coding helps paper over this complexity, but ultimately it distracts us from recognizing that we're building on the wrong primitives.

Building robust applications requires rich type systems, polymorphism, fine-grained mutations, authentication, authorization, and client data synchronization. Our database systems were conceived of before we knew we needed these capabilities, so we came up with the three-tier architecture and split these capabilities across the logic tier and the client tier.

This split comes with enormous costs. Building even a simple todo application requires hand-crafting an API layer, hand-crafting data synchronization with that API layer on the client, and then building UI components that are completely coupled to our particular API layer. What if we could compress the three-tier architecture into a single rich programming environment, avoid all this undifferentiated work, and make our software interoperable and composable?

Palantir's Foundry platform is an imperfect but underrated existence proof of this idea. Palantir's CTO had a good thread about this a couple of years ago.

"3/12 @petewilz on Why is Ontology a category-defining piece of technology? Traditionally, software systems have gravitated towards a partitioned model for data - a physical model for the database, a logical model for applications, and a conceptual model for users."

Between Foundry and other projects across the tech ecosystem, all the ideas are there for us to build an open-standards application development platform that dramatically lowers the marginal cost of software creation. Let's work backwards from the client.

Traditional client state management

Any data-intensive web or mobile application with a central server has to synchronize data while the user interacts with it. Put another way, every such application is really a distributed system. The typical approach to this synchronization problem boils down to some version of:

- Fetch data from an API (REST, GraphQL, etc.), assuming little to nothing about the structure of the underlying data besides having a notion of resources with unique keys

- Cache resources locally in a key/value store

- Give the developer some facilities to imperatively update or invalidate the cache in response to user actions and subsequent server responses

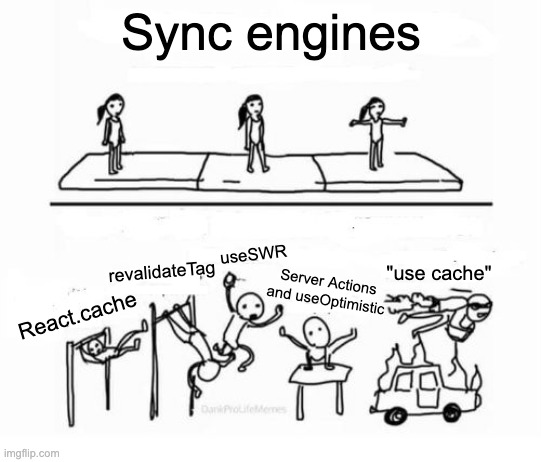

Web frameworks like Next.js do a version of this. Popular client libraries like useSWR and React Query do a version of this. The Relay and Apollo GraphQL frameworks do a slightly more enlightened version of this, relying on the Global Object Identification Specification layered on top of vanilla GraphQL.

This approach is bad! Cache invalidation is typically considered one of the two hardest problems in computer science, so we should solve it at the system level rather than leaving developers to solve it themselves.
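To make the failure mode concrete, here is a minimal TypeScript sketch of the key/value pattern described above. It is not any particular library's API; `fetchCached`, `completeTodo`, and the cache keys are hypothetical. The mutation has to list every cache key it might affect, and nothing checks that the list is complete.

```typescript
type Todo = { id: string; title: string; done: boolean };

// Stand-in for the server's data.
const server = new Map<string, Todo>([
  ["1", { id: "1", title: "Write post", done: false }],
  ["2", { id: "2", title: "Ship it", done: false }],
]);

// The client cache: opaque values under developer-chosen string keys.
const cache = new Map<string, unknown>();

function fetchCached<T>(key: string, load: () => T): T {
  if (!cache.has(key)) cache.set(key, load());
  return cache.get(key) as T;
}

// Two views whose data overlaps, cached under unrelated keys.
const getTodo = (id: string): Todo =>
  fetchCached(`todo:${id}`, () => ({ ...server.get(id)! }));

const getOpenTodos = (): Todo[] =>
  fetchCached("todos:open", () =>
    [...server.values()].filter((t) => !t.done).map((t) => ({ ...t }))
  );

// A mutation must name every cache key it could make stale.
function completeTodo(id: string, invalidate: string[]): void {
  server.get(id)!.done = true;
  for (const key of invalidate) cache.delete(key);
}

getTodo("1");
getOpenTodos();
completeTodo("1", ["todo:1"]); // forgot "todos:open"
const refreshed = getTodo("1"); // reloaded from the server: done is true
const stale = getOpenTodos(); // still cached: both todos, including the completed one
```

The cache can't catch the missing `"todos:open"` key because it has no idea what data any key contains; the open-todos view stays wrong until something else happens to evict it.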

Sync engines

"And to put it bluntly, they are all in the same stage of elaborately learned superstition as medical science was early in the last century, when physicians put their faith in bloodletting, to draw out the evil humors which were believed to cause disease. With bloodletting, it took years of learning to know precisely which veins, by what rituals, were to be opened for what symptoms. A superstructure of technical complication was erected in such deadpan detail that the literature still sounds almost plausible."

-- Jane Jacobs, The Death and Life of Great American Cities

What we really want is declarative data synchronization. React lets us describe how we want our UI to look as a function of our data, and it uses a reconciliation algorithm to efficiently coordinate updates to our UI. In the same way, we should be able to declare the data our UI needs, and a sync engine should work out how to efficiently fetch that data and keep it up-to-date in response to both local and remote changes.
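As a rough illustration of the declarative shape (not Zero's API; `Store`, `Query`, and `subscribe` are hypothetical names), a component can hand a store a filter and a sort order and receive results whenever data changes, with no fetch or invalidation code of its own. This naive version simply re-runs every subscribed query on every write, which is correct but wasteful.

```typescript
interface Query<R> {
  where: (row: R) => boolean;
  orderBy: (a: R, b: R) => number;
}

class Store<R extends { id: string }> {
  private rows = new Map<string, R>();
  private subscribers = new Set<() => void>();

  // Declare the data a component needs; `onChange` receives results
  // immediately and again after every write.
  subscribe(query: Query<R>, onChange: (results: R[]) => void): () => void {
    const run = () =>
      onChange([...this.rows.values()].filter(query.where).sort(query.orderBy));
    run();
    this.subscribers.add(run);
    return () => {
      this.subscribers.delete(run);
    };
  }

  // Local mutations and remote updates both land here.
  upsert(row: R): void {
    this.rows.set(row.id, row);
    // Naive: recompute every subscribed query from scratch.
    for (const run of this.subscribers) run();
  }
}

// Usage: the component only states what it wants.
type Track = { id: string; playcount: number; starred: boolean };
const store = new Store<Track>();
let starred: Track[] = [];
const unsubscribe = store.subscribe(
  { where: (t) => t.starred, orderBy: (a, b) => a.playcount - b.playcount },
  (results) => {
    starred = results;
  }
);
store.upsert({ id: "a", playcount: 5, starred: true });
store.upsert({ id: "b", playcount: 2, starred: true });
store.upsert({ id: "c", playcount: 1, starred: false });
```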

There's a growing movement closely related to this idea, and the most promising framework in development is Zero. You should look at their docs, but I'm reproducing their sample code here.

```tsx
function Playlist({ id }: { id: string }) {
  // This usually resolves *instantly*, and updates reactively
  // as server data changes. Just wire it directly to your UI –
  // no HTTP APIs, no state management, no realtime goop.
  const playlists = useQuery(
    zero.query.playlist
      .related("tracks", (track) =>
        track.related("album").related("artist").orderBy("playcount", "asc")
      )
      .where("id", id)
  );
  // The query returns playlist rows (at most one here, filtered by id);
  // each row carries its related tracks.
  const tracks = playlists.flatMap((playlist) => playlist.tracks);

  const onStar = (id: string, starred: boolean) => {
    zero.mutate.track.update({
      id,
      starred,
    });
  };

  return (
    <div>
      {tracks.map((track) => (
        <TrackRow key={track.id} track={track} onStar={onStar} />
      ))}
    </div>
  );
}
```

Zero lets you declare the data requirements for a particular component, and it automatically keeps that data up to date as it changes both locally and remotely. It's built on the theory of incremental view maintenance, or IVM (you can find the main paper Zero takes inspiration from here), which addresses how to update query results after the underlying data changes more efficiently than simply re-running the queries.
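The core idea of IVM can be sketched for the simplest case: one view defined by a filter and a sort order. This is a hypothetical, heavily simplified sketch (Zero's real implementation handles joins, relationships, and much more), and `SortedView` and `Change` are illustrative names. Each change touches only the row that changed: its old version is dropped if the view holds it, and the new version is inserted at its sorted position via binary search, instead of re-scanning the whole table.

```typescript
type Change<R> = { kind: "upsert"; row: R } | { kind: "delete"; id: string };

class SortedView<R extends { id: string }> {
  readonly rows: R[] = [];

  constructor(
    private readonly where: (row: R) => boolean,
    private readonly compare: (a: R, b: R) => number
  ) {}

  // Apply one change to the already-materialized result; the base table is
  // never scanned. (Array splices are still linear in the view's size; a real
  // engine would use better data structures.)
  apply(change: Change<R>): void {
    const id = change.kind === "delete" ? change.id : change.row.id;
    // Drop the row's previous version if the view currently holds it.
    const i = this.rows.findIndex((r) => r.id === id);
    if (i !== -1) this.rows.splice(i, 1);
    // Insert the new version at its sorted position if it passes the filter.
    if (change.kind === "upsert" && this.where(change.row)) {
      this.rows.splice(this.lowerBound(change.row), 0, change.row);
    }
  }

  // Binary search: first index whose row does not sort before `row`.
  private lowerBound(row: R): number {
    let lo = 0;
    let hi = this.rows.length;
    while (lo < hi) {
      const mid = (lo + hi) >> 1;
      if (this.compare(this.rows[mid], row) < 0) lo = mid + 1;
      else hi = mid;
    }
    return lo;
  }
}

type Track = { id: string; playcount: number; starred: boolean };
const view = new SortedView<Track>(
  (t) => t.starred,
  (a, b) => a.playcount - b.playcount
);
view.apply({ kind: "upsert", row: { id: "a", playcount: 5, starred: true } });
view.apply({ kind: "upsert", row: { id: "b", playcount: 2, starred: true } });
view.apply({ kind: "upsert", row: { id: "c", playcount: 9, starred: false } });
view.apply({ kind: "upsert", row: { id: "c", playcount: 3, starred: true } }); // c enters the view
view.apply({ kind: "delete", id: "a" });
```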

Elements of a rich programming environment

Imagine if I could write a generic UI component with data dependencies and mutations, freely compose it with other components, and have everything just work. In other words, what if we could bring React's composability to the data layer of our UI components?

As a concrete example, let's consider what it would look like to replace a SaaS app like Calendly. Calendly lets you embed their scheduling component with an iframe, and gives you a callback for when an event is booked. The resulting booking data lives in Calendly, and the developer is left to cobble everything together on the backend and the frontend. We should be able to replace this with an abstract scheduling component -- ideally built on a standard scheduling interface -- that loads data for slots that can be scheduled and performs mutations for bookings. When I perform a booking, other parts of my app that use that data (say, an upcoming appointments list) should automatically update, respecting any filters or sorts I have applied.

Between ideas from Zero, Foundry (via OSDK and Workshop), and GraphQL (via Relay), we're circling around the solution. There are four elements in particular to focus on: read composability, write synchronization, rich semantic modeling, and rich querying.

Read composability

For reads to compose, components need to be able to declare their data requirements without being responsible for actually fetching, so we can bundle up the data requirements for an entire UI tree into a single efficient query. Relay has a good video about this that is really worth watching, and a good blog post. I'll reproduce the key parts of the blog post here.

In component-based UI systems such as React, one important decision to make is where in your UI tree you fetch data. While data fetching can be done at any point in the UI tree, in order to understand the tradeoffs at play, let’s consider the two extremes:

- Leaf node: Fetch data directly within each component that uses data

- Root node: Fetch all data at the root of your UI and thread it down to leaf nodes using prop drilling

Relay leverages GraphQL fragments and a compiler build step to offer a more optimal alternative. In an app that uses Relay, each component defines a GraphQL fragment which declares the data that it needs. This includes both the concrete values the component will render as well as the fragments (referenced by name) of each direct child component it will render.

At build time, the Relay compiler collects these fragments and builds a single query for each root node in your application. Let’s look at how this approach plays out for each of the dimensions described above:

- ✅ Loading experience - The compiler generated query fetches all data needed for the surface in a single roundtrip

- ✅ Suspense cascades - Since all data is fetched in a single request, we only suspend once, and it’s right at the root of the tree

- ✅ Composability - Adding/removing data from a component, including the fragment data needed to render a child component, can be done locally within a single component. The compiler takes care of updating all impacted root queries

- ✅ Granular updates - Because each component defines a fragment, Relay knows exactly which data is consumed by each component. This lets relay perform optimal updates where the minimal set of components are rerendered when data changes
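A compiler-free approximation of this idea is sketched below, using a hypothetical `Fragment` shape of our own (this is not Relay's compiler, syntax, or generated artifacts). Each component declares the fields it renders, the fragments of child components rendered on the same object, and the fragments needed under each relationship; the root merges them into a single selection, so the whole tree can be fetched in one request.

```typescript
interface Fragment {
  name: string; // the component that owns this fragment
  fields: string[]; // fields the component itself renders
  spreads?: Fragment[]; // child components rendered on the same object
  relations?: Record<string, Fragment[]>; // child components under a relationship
}

type FieldSelection = { fields: Set<string>; nested: Map<string, FieldSelection> };

const emptySelection = (): FieldSelection => ({
  fields: new Set<string>(),
  nested: new Map<string, FieldSelection>(),
});

// Merge a fragment and everything it references into one selection.
function collect(fragment: Fragment, into: FieldSelection = emptySelection()): FieldSelection {
  for (const field of fragment.fields) into.fields.add(field);
  for (const spread of fragment.spreads ?? []) collect(spread, into);
  for (const [relation, children] of Object.entries(fragment.relations ?? {})) {
    let nested = into.nested.get(relation);
    if (!nested) {
      nested = emptySelection();
      into.nested.set(relation, nested);
    }
    for (const child of children) collect(child, nested);
  }
  return into;
}

// Render a merged selection as GraphQL-like text, sorted for stable output.
function printSelection(sel: FieldSelection): string {
  const parts = [...sel.fields].sort();
  const relations = [...sel.nested.entries()].sort(([a], [b]) => a.localeCompare(b));
  for (const [relation, nested] of relations) {
    parts.push(`${relation} ${printSelection(nested)}`);
  }
  return `{ ${parts.join(" ")} }`;
}

// Each component owns its data requirements; parents only reference children.
const AlbumArt: Fragment = { name: "AlbumArt", fields: ["coverUrl"] };
const TrackRow: Fragment = {
  name: "TrackRow",
  fields: ["id", "title"],
  relations: { album: [AlbumArt] },
};
const PlaylistHeader: Fragment = { name: "PlaylistHeader", fields: ["name"] };
const PlaylistPage: Fragment = {
  name: "PlaylistPage",
  fields: ["id"],
  spreads: [PlaylistHeader],
  relations: { tracks: [TrackRow] },
};

// One query for the whole tree, built at the root.
const rootQuery = printSelection(collect(PlaylistPage));
```

Adding a field to `AlbumArt` changes `rootQuery` without touching `TrackRow` or `PlaylistPage`, which is the local-edit property the Relay post describes.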

The reason this works in Relay is that GraphQL is a composable query language where the results match the shape of the query. SQL doesn't have this property -- it gives you flat results that don't match the shape of your query. Palantir's Object Set API has the same problem, which is why OSDK queries aren't composable. Workshop (Foundry's no-code app builder) is built on the same API, which is part of why Workshop apps have crazy request waterfalls. Zero has a custom query language called ZQL that is very intentionally composable.
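The shape mismatch is easy to see with a join. In this hypothetical example, a playlist-to-tracks join comes back as one flat row per (playlist, track) pair, with playlist columns repeated, and the client must regroup it before any component can use it. Adding a child component's data needs means adding columns to the join and updating the regrouping code as well.

```typescript
// One flat row per (playlist, track) pair; playlist columns repeat.
type JoinRow = {
  playlistId: string;
  playlistName: string;
  trackId: string;
  trackTitle: string;
};

type Track = { id: string; title: string };
type Playlist = { id: string; name: string; tracks: Track[] };

// Regroup flat join output into the nested shape the UI actually renders.
function nest(rows: JoinRow[]): Playlist[] {
  const byId = new Map<string, Playlist>();
  for (const row of rows) {
    let playlist = byId.get(row.playlistId);
    if (!playlist) {
      playlist = { id: row.playlistId, name: row.playlistName, tracks: [] };
      byId.set(row.playlistId, playlist);
    }
    playlist.tracks.push({ id: row.trackId, title: row.trackTitle });
  }
  return [...byId.values()];
}

const rows: JoinRow[] = [
  { playlistId: "p1", playlistName: "Focus", trackId: "t1", trackTitle: "Intro" },
  { playlistId: "p1", playlistName: "Focus", trackId: "t2", trackTitle: "Outro" },
];
const playlists = nest(rows);
```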

Besides query composability, some framework features are also needed for true read composability. One is the ability to dynamically choose which properties to load for generic components like Workshop's Object Table. Relay doesn't allow this: its compiler requires query definitions to be fully static apart from GraphQL variables, for performance and security reasons (e.g. so it can support persisted queries). These decisions make sense as defaults for Facebook, but not for most apps. Zero collects subqueries into full queries more simply, without a compiler, which should allow for dynamic queries. On net this is probably the best solution, and Zero could probably layer in a compiler later for sites that need to be hyper-optimized like Facebook.

Another feature needed is support for React Suspense. In this context, Suspense lets us decouple loading states from components: if I'm pulling multiple components I didn't necessarily write into my app, I can compose them and provide a single loading state that wraps them, rather than relying on their individual pre-baked loading states and ending up with inconsistent UI that I don't want in my app.

```tsx
import { Suspense } from "react";
import { SchedulingComponent } from "scheduling-library";
import { CalendarComponent } from "calendar-library";

// ...

<Suspense fallback="Loading...">
  <SchedulingComponent {...props} />
  <CalendarComponent {...props} />
</Suspense>;
```

Relay is built on Suspense; Zero doesn't have it yet, but it should be relatively easy to add.

Summary:

- ❌ Foundry + OSDK

- ❌ Foundry + Workshop

- ✅ GraphQL + Relay

- ✅ Zero

Write synchronization

For components to compose with respect to writes, mutations triggered from one component should be automatically reflected in other components on the page without those components needing awareness of each other. This means components need to write to and subscribe to the same frontend data store.

Zero's sync engine inherently provides full write synchronization -- by solving data synchronization between users working on different machines, you accidentally solve data synchronization between components on a single page. Relay maintains a normalized store, which means it keeps components that reference the same records in sync, but it has no real notion of filters, sorts, or aggregations, so if your query involves any of those you are left to orchestrate store updates in response to mutations yourself. Workshop does something similar to Relay but a bit more automatic. For example, after an Action it will realize that it might need to invalidate particular queries and will refetch them. But it doesn't do anything in the way of IVM, so it does far more work than it needs to, which results in both much more load on the database and a much worse user experience.
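The difference can be sketched with a hypothetical booking scenario (all names are illustrative, and Relay's actual store and updater APIs are much richer than this). In the normalized store, a query result is a cached list of ids, so record updates show up everywhere, but a new booking or a cancellation doesn't change which appointments are listed. In the query-aware store, the list is defined by its filter and sort, so it stays correct after any write.

```typescript
type Appointment = { id: string; start: number; cancelled: boolean };

// Normalized store: records by id, plus query results cached as id lists.
class NormalizedStore {
  readonly records = new Map<string, Appointment>();
  readonly queryResults = new Map<string, string[]>();

  write(a: Appointment): void {
    this.records.set(a.id, a);
  }

  // Record fields are always current, but membership and order are whatever
  // the server returned when the query last ran.
  read(queryKey: string): Appointment[] {
    const ids = this.queryResults.get(queryKey) ?? [];
    return ids.map((id) => this.records.get(id)!);
  }
}

// Query-aware store: the view is defined by its filter and sort, so it can be
// recomputed (or incrementally maintained) after any write.
class QueryAwareStore {
  readonly records = new Map<string, Appointment>();

  write(a: Appointment): void {
    this.records.set(a.id, a);
  }

  upcoming(now: number): Appointment[] {
    return [...this.records.values()]
      .filter((a) => !a.cancelled && a.start >= now)
      .sort((x, y) => x.start - y.start);
  }
}

const seed: Appointment[] = [
  { id: "a1", start: 100, cancelled: false },
  { id: "a2", start: 200, cancelled: false },
];
const normalized = new NormalizedStore();
const queryAware = new QueryAwareStore();
for (const a of seed) {
  normalized.write(a);
  queryAware.write(a);
}
normalized.queryResults.set("upcoming", ["a1", "a2"]); // as fetched from the server

// The scheduling component books a new slot and cancels an existing one.
for (const a of [
  { id: "a3", start: 150, cancelled: false },
  { id: "a1", start: 100, cancelled: true },
]) {
  normalized.write(a);
  queryAware.write(a);
}

const staleList = normalized.read("upcoming"); // a1 (now cancelled) and a2; a3 missing
const freshList = queryAware.upcoming(0); // a3, a2
```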

Summary:

- ❌ Foundry + OSDK

- ⚠️ Foundry + Workshop

- ⚠️ GraphQL + Relay

- ✅ Zero

Rich semantic modeling

Modern programming languages all have rich types and facilities for polymorphism, which are critical for expressing and maintaining programs. In a distributed object system we also need fine-grained mutations to express transactional business logic, and explicit relationships between object types.

GraphQL actually does a pretty good job here. Its type system is fairly rich, including arrays, nested types, enums, custom scalars, and nullability. It provides fine-grained mutations, and polymorphism through interfaces and unions.

Foundry's Ontology has an even more robust type system. It doesn't have unions (yet), but it has a better version of interfaces than GraphQL that enables the same code to work across heterogeneous organizations via interface property mapping (also see Cambria from Ink & Switch -- Ontology Interfaces can be thought of as a degenerate version of the same abstract idea). For custom constrained types it has Value Types. For mutations it has Actions, which can be defined with code or with simple declarative rules. It has explicit relationship configuration via Link Types.
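Interface property mapping can be sketched in a few lines of TypeScript. This illustrates the concept only and is not the Ontology's actual API; `Schedulable`, `PropertyMapping`, and both object types are hypothetical. Two organizations model bookable things with different property names, each declares how its properties map onto a shared interface, and generic code written once against the interface works for both.

```typescript
// The shared interface that generic components are written against.
interface Schedulable {
  title: string;
  startsAtMs: number;
  durationMinutes: number;
}

// For each interface property, the name of the concrete property backing it.
// (This sketch checks that the name exists, not that its type is compatible.)
type PropertyMapping<T> = { [K in keyof Schedulable]: keyof T };

// Two organizations' object types with different property names.
type ClinicVisit = { reason: string; visitTimeMs: number; lengthMin: number };
type SalesCall = { subject: string; scheduledForMs: number; minutes: number };

const clinicVisitMapping: PropertyMapping<ClinicVisit> = {
  title: "reason",
  startsAtMs: "visitTimeMs",
  durationMinutes: "lengthMin",
};
const salesCallMapping: PropertyMapping<SalesCall> = {
  title: "subject",
  startsAtMs: "scheduledForMs",
  durationMinutes: "minutes",
};

// View a concrete object through the interface using its mapping.
function asSchedulable<T>(obj: T, mapping: PropertyMapping<T>): Schedulable {
  return {
    title: String(obj[mapping.title]),
    startsAtMs: Number(obj[mapping.startsAtMs]),
    durationMinutes: Number(obj[mapping.durationMinutes]),
  };
}

// Generic logic, written once against the interface.
function endsAtMs(item: Schedulable): number {
  return item.startsAtMs + item.durationMinutes * 60_000;
}

const visit: ClinicVisit = { reason: "Checkup", visitTimeMs: 0, lengthMin: 30 };
const call: SalesCall = { subject: "Demo", scheduledForMs: 1_000, minutes: 15 };
const visitEnd = endsAtMs(asSchedulable(visit, clinicVisitMapping));
const callEnd = endsAtMs(asSchedulable(call, salesCallMapping));
```

Cambria goes further, using bidirectional lenses to translate data in both directions between schema versions; this one-way mapping only covers reads.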

Zero's schema configuration is very limited in its type system right now, only allowing strings, numbers, and booleans. It has relationships but doesn't have fine-grained mutations (yet), and doesn't have any polymorphism.

Summary:

- ✅ Foundry + OSDK

- ✅ Foundry + Workshop

- ✅ GraphQL + Relay

- ❌ Zero

Rich querying

Embracing a rich query language in our applications (e.g. SQL) rather than treating a database as a dumb key/value store to be abstracted over lets us completely avoid the traditional work of hand-crafting RPC endpoints for UI views. You might still write query functions for custom logic, but no more writing endpoints for getBook, getBooks, searchBooks. This obviously requires our database layer to handle permissions, but securing data should be the responsibility of a database!
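To illustrate, here is a minimal sketch with a hypothetical in-memory query format (not SQL, ZQL, or the Object Set API). One generic `runQuery` function covers what would otherwise be `getBook`, `getBooks`, and `searchBooks`, and permissions are enforced inside it by intersecting every query with a row-level rule, so the client can't query its way around them.

```typescript
type Book = { id: string; title: string; year: number; ownerId: string };

// A tiny query format: data the client sends, not an endpoint per view.
type BookQuery = {
  where?: Partial<Book>; // equality filters
  titleContains?: string; // case-insensitive substring search
  orderBy?: "title" | "year";
  limit?: number;
};

// Row-level rule enforced by the data layer on every query.
const canRead = (userId: string, book: Book): boolean => book.ownerId === userId;

function compare(x: string | number, y: string | number): number {
  if (typeof x === "number" && typeof y === "number") return x - y;
  return String(x).localeCompare(String(y));
}

function runQuery(books: Book[], userId: string, q: BookQuery): Book[] {
  let rows = books.filter((b) => canRead(userId, b));
  for (const [field, value] of Object.entries(q.where ?? {})) {
    rows = rows.filter((b) => b[field as keyof Book] === value);
  }
  const needle = q.titleContains?.toLowerCase();
  if (needle) rows = rows.filter((b) => b.title.toLowerCase().includes(needle));
  const key = q.orderBy;
  if (key) rows = [...rows].sort((a, b) => compare(a[key], b[key]));
  return q.limit === undefined ? rows : rows.slice(0, q.limit);
}

const books: Book[] = [
  { id: "b1", title: "Sync Engines in Practice", year: 2024, ownerId: "u1" },
  { id: "b2", title: "Incremental Views", year: 2019, ownerId: "u1" },
  { id: "b3", title: "Someone Else's Notes", year: 2021, ownerId: "u2" },
];

// What used to be getBook, getBooks, and searchBooks:
const one = runQuery(books, "u1", { where: { id: "b1" } });
const all = runQuery(books, "u1", { orderBy: "year" });
const found = runQuery(books, "u1", { titleContains: "sync" });
```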

GraphQL goes a tiny bit in this direction in that it doesn't require us to define exact query patterns up front, but it's not really a rich query language in that it has no concept of filters, sorts, aggregations, unions, intersections, etc.

Zero's ZQL is specifically a rich query language, as is Palantir's Object Set API that OSDK is built on.

One obvious critique of embracing rich query languages is the risk of denial of service, both intentional attacks and unintentional overload from someone forgetting to add the right database indexes. Relay has a "persisted queries" feature as an answer to this that could also be applied to rich query languages: query shapes for an application are statically analyzed before deployment so that queries at runtime can be restricted to those shapes. In fact, "ability to lock queries down to only expected forms for security" is on the Zero roadmap. On the database indexes point, I really think we should move past manually enumerating database indexes anyway and towards (1) indexing everything (à la Elasticsearch, Foundry, Firebase), (2) using static analysis of the queries in an application (which is enabled by embracing a rich query language!) to automatically figure out what indexes we should create, or (3) automatically maintaining indexes at runtime based on actual queries (à la AlloyDB).
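Both mitigations depend on queries being data that can be analyzed. The sketch below uses a hypothetical query AST: `shapeOf` erases literal values so the shapes an app can produce are collected at build time and anything else is rejected at runtime (a variation on persisted queries, which in Relay persist whole query documents), and `suggestIndexes` derives composite indexes from the same queries using the common "equality, then sort, then range" rule of thumb. This is not how Zero or any particular database implements either feature.

```typescript
type Condition = { field: string; op: "=" | "<" | ">"; value: string | number };
type QueryAst = { table: string; where: Condition[]; orderBy?: string };

// A query's shape: its structure with literal values erased.
function shapeOf(q: QueryAst): string {
  const conditions = q.where.map((c) => `${c.field}${c.op}?`).sort().join(",");
  return `${q.table}|${conditions}|${q.orderBy ?? ""}`;
}

// Build time: collect the shapes the app's code can produce.
function buildAllowlist(appQueries: QueryAst[]): Set<string> {
  return new Set(appQueries.map(shapeOf));
}

// Runtime: only admit queries whose shape was seen at build time.
function admit(allowlist: Set<string>, q: QueryAst): boolean {
  return allowlist.has(shapeOf(q));
}

// One composite index per query: equality fields, then the sort field, then
// one range field (a rule of thumb, not a query planner).
function suggestIndexes(appQueries: QueryAst[]): string[] {
  const indexes = new Set<string>();
  for (const q of appQueries) {
    const columns = q.where.filter((c) => c.op === "=").map((c) => c.field).sort();
    const range = q.where.find((c) => c.op !== "=");
    for (const col of [q.orderBy, range?.field]) {
      if (col && !columns.includes(col)) columns.push(col);
    }
    if (columns.length > 0) indexes.add(`${q.table}(${columns.join(", ")})`);
  }
  return [...indexes].sort();
}

const appQueries: QueryAst[] = [
  {
    table: "booking",
    where: [
      { field: "userId", op: "=", value: "u1" },
      { field: "start", op: ">", value: 0 },
    ],
    orderBy: "start",
  },
  { table: "slot", where: [{ field: "calendarId", op: "=", value: "c1" }] },
];
const allowlist = buildAllowlist(appQueries);
```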

Summary:

- ✅ Foundry + OSDK

- ✅ Foundry + Workshop

- ❌ GraphQL + Relay

- ✅ Zero

Looking to the future

Embracing a rich query language lets us avoid isolating the database tier. Embracing the rich semantic modeling we've come to expect from modern programming languages lets us avoid isolating the logic tier. Foundry's Ontology system does both of these already, enabling composability at the application level that helps drive the marginal cost of integrating the next application towards zero. A sync engine that provides read composability and write synchronization achieves the final piece, enabling composability at the component level, which lets us avoid isolating the client tier (on top of just making UI programming generally way easier to reason about).

Returning to our original example, Calendly is nominally a $3B business and does around $300M in revenue. Given that Calendly is little more than a scheduling widget and it's not even the only player in that market, our economy obviously places a lot of value on that particular UI component integration. And the end result isn't even good! With the right programming environment we can recapture all this value for organizations actually doing things. Multiply this out over the hundreds of SaaS products that organizations try to stitch together to function (even more if you consider transitive dependencies), and it seems we might be able to run efficient organizations with far fewer resources than we do now, which could have a radical effect on how we organize our entire economy and society.

If you want to chat about any of this please reach out!